Technological hope

2007 was every bit weird. It was the year before the global economic crisis, whose repercussions we are still feeling today. The year when the first iPhone hit the shelves and reshaped our daily lives. Facebook went global in 2007, Twitter skyrocketed, and fracking began. Android was released. However, the coolest thing about it was that no one could really relate to those technological leaps. We just knew something was happening, but there was no way to find out about those advancements apart from personal use. There were no YouTube vloggers with millions of followers explaining what was happening. No hashtags as a primary source of news. No SoundCloud rappers and no ‘flexing’, for God’s sake. Let’s look at it again:

In 2007 fast internet was there, smartphones were there, social networks existed with no other purpose than letting people meet online, and yet we did not use any of it to serve political or commercial interests.

There was an understanding that technology would change it all, but people in power either resisted it, neglected it because they considered digital data and channels “not serious”, or simply did not realize the magnitude of the impact digital globalization would have.

Image credit: Akshar Pathak

Humanity has a long history of putting its greatest inventions to use in the most dreadful ways.

Take gunpowder, for example. Before it became gunpowder, it was generally a medical substance that alchemists called fire medicine (“huoyao” 火藥). It was a by-product of the search for longevity-increasing drugs. It quickly became an entertainment tool, and after a fair amount of playing and experimenting with formulas, proportions, and quantities, the black powder’s violent potential was unlocked.

First, it showed up in the names of weaponry that, though not technologically there yet, was already in the minds of some of our opportunistic ancestors. Here are just a few of those names: “flying incendiary club for subjugating demons,” “caltrop fireball,” “ten-thousand fire flying sand magic bomb,” “big bees nest,” “burning heaven fierce fire unstoppable bomb,” “fire bricks” which released “flying swallows,” “flying rats,” “fire birds,” and “fire oxen”.

From there on, the world was not the same. In our quest for a longer life, we came up with the quickest way to take it. And we enjoyed a lot of fireworks along the way.

The infocalypse

Times have changed, but our nature hasn’t. Our gunpowder is information itself. With all of our best aspirations, we craved knowledge. People climbed the scaffold in the belief that truth is superior to everything. People gladly sacrificed their lives in the name of scientific and innovative research.

Ironically, the body of work our predecessors put together for a better world turned us into pathological information consumers. Having stepped over the hardships of technological and informational advancement, at some point we started taking it all for granted. Much like we do with nature, we assume everything around us exists for one purpose – to cater to our needs and wants, whatever they are. Addiction to knowledge and our constant thirst to see what’s on the other side is what makes us us. Even with the best intentions, we are putting ourselves at risk of being caught in a whirlwind we create but cannot tame. Vernor Vinge wrote a dystopian essay in 1993, projecting the idea of man-made superintelligence imposing doom upon us. Quoting I. J. Good from the 1960s, he writes:

“It is more probable than not that, within the twentieth century, an ultraintelligent machine will be built and that it will be the last invention that man need make.”

A lot of fiction writers have played with the idea of a collapsed technological society, but the common problem they have is detachment. Part of the experience that makes dystopian novels so cool is how different those humans are from us. It’s almost a prerequisite: a new version of mankind dealing with a new twisted reality. However, even compared to ancient times, we haven’t really changed. We still commit ourselves to crazy things just because we want to prove a point or see what happens if…

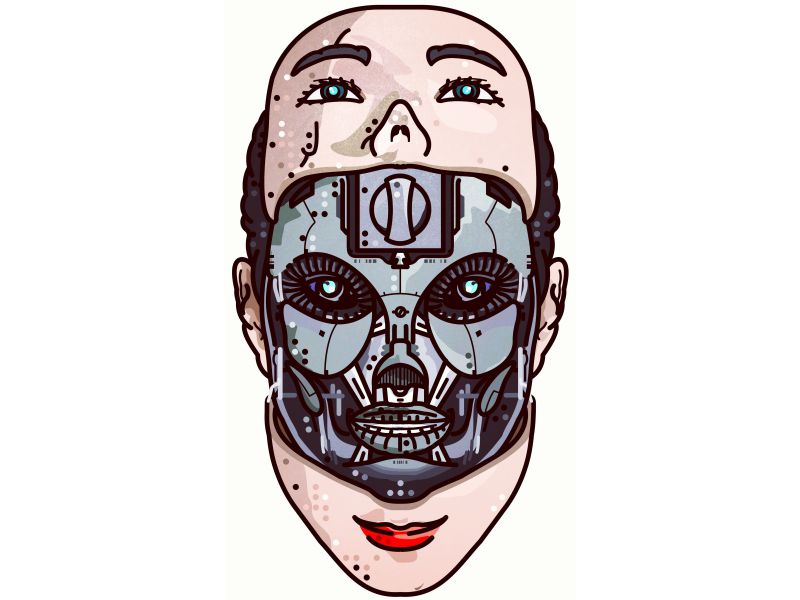

Image credit: Ben Kókolas

Our assemblage point today is technological development for the sake of development. Every year we expect new and astonishing things to pop up. The more advanced it gets, the higher the demand and the threshold for what we’d consider sufficient.

When you think how gradual the transition from tangible to intangible assets was, it gets scary. On top of that, tangible things like gold and diamonds have never even come close to the impact information has.

The term “infocalypse” was introduced by one of the whistleblowers of the Intelligence Explosion – Aviv Ovadya. Infocalypse is the result of the evolution of technologies, when they start being used to enhance or distort reality faster than we can fathom. The infocalypse is when no one can tell whether something happened or not because there is no objective indication of the truth. Truth becomes relative, almost a quantum entity.

The lines between fact and fiction become obsolete and everything depends on the quality and quantity of information you can orchestrate. Needless to say, AI’s potential for this is immense. It’s crazy, because the mere fact that AI could someday outsmart us in everything shakes our integrity with no real proof whatsoever.

Ten years on from 2007, we are all fucked. Propaganda and politics finally got hold of what cool kids initially built to escape the boring world. The crony uncharted territory of the internet became a battlefield of the filthiest kind. Trolls, bots, Cambridge Analytica, Twitter diplomacy, fake news, fake chemical attacks, manipulation, and blunt-force misinformation. Welcome to 2018. Infocalypse oozing through your iPhone X.

Image credit: Mark & Type

How did we allow this to happen? Who runs things now? How do we get off the hook? Unfortunately, there seems to be no way out, as our digital reality is not something you can escape. It’s all over the place. And it does not share power. This year’s Google I/O was literally fantastic. From all the AI-driven technologies to more ethics, safety, and happiness. Even though the developers’ message is clear – better world, better life – how long before things start to get weird? Already, we are facing what they call reality apathy. Tech companies pander to the idea of changing the fabric of our society. Even with the best possible intentions, we are getting more and more disconnected from the development behind these technologies.

We don’t understand how our daily digital technologies work, yet we completely rely on them. We trust them, and let them regulate us as long as someone of our kind regulates them.

This can change when AI-driven technologies start regulating themselves. As of now, we are far from having machines that can improve themselves. And frankly it’s hard to disagree with what philosophy professor John R. Searle has to say in “What Your Computer Can’t Know”:

Computers have, literally …, no intelligence, no motivation, no autonomy, and no agency. We design them to behave as if they had certain sorts of psychology, but there is no psychological reality to the corresponding processes or behavior. … The machinery has no beliefs, desires, or motivations.

The problem is, AI doesn’t need senses, doesn’t need morals or beliefs. It operates on an entirely different field. Malice is an emotion. You can’t teach a machine to get mad at something, but even today, AI is capable of analyzing the situations that lead to human malice, its repercussions, and the mathematical consequences of the actions it brings. Computers don’t have to base their actions on emotions. Nick Bostrom in “The Future Of Human Evolution” makes the following statement:

When we create the first superintelligent entity, we might make a mistake and give it goals that lead it to annihilate humankind, assuming its enormous intellectual advantage gives it the power to do so. For example, we could mistakenly elevate a subgoal to the status of a supergoal. We tell it to solve a mathematical problem, and it complies by turning all the matter in the solar system into a giant calculating device, in the process killing the person who asked the question.

Image credit: ninebrains

The gloomiest scenario might never happen in this form, but if we react to the potential dangers, they become real. But what happens if we don’t? What if we let technological development roam free? What happens when machine autonomy, which is impossible today because chips rely on conflict minerals dug out of open mines, becomes real? The term commonly used to describe the pinnacle of AI development is singularity.

Singularity

Technological Singularity is a whole doctrine revolving around the development of transhumanism up to the point where it spins out of control.

The nature of this futuristic assumption is a delicate matter, which means it can be carried away into the depths of theories about human extermination by a divine AI or, optimistically, taken to scenarios of renewable and solar-powered energy sources, prolonged life through human body modifications, and downloadable consciousness.

Image credit: Rich Hinchcliffe

[When] is it possible?

The prerequisite of artificial superintelligence and singularity is unlimited hardware power. No AI autonomy means a machine won’t mine coltan and cobalt in Congo. There has to be a human being putting in physical labor in order for AI to even be possible.

Truth is, this hardware abundance is not happening. In fact, we’re plateauing, and this is the first time Moore’s Law has been compromised since it was formulated in 1965. The law postulates that the density of transistors on an integrated circuit doubles roughly every two years, doubling computing power.
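To get a feel for what that doubling rule implies, here is a back-of-the-envelope sketch. It assumes an idealized, perfectly constant two-year doubling period, which real chips never followed exactly, and the function name `projected_density` and its parameters are made up purely for illustration.

```python
def projected_density(start_density: float, start_year: int,
                      target_year: int, doubling_years: float = 2.0) -> float:
    """Project transistor density under an idealized doubling rule.

    Assumption: density doubles every `doubling_years` years with no
    slowdown. This is a simplification, not a model of real hardware.
    """
    elapsed = target_year - start_year
    # Each doubling period multiplies the density by 2.
    return start_density * 2 ** (elapsed / doubling_years)


if __name__ == "__main__":
    # Relative growth between the year the law was formulated and 2018.
    growth = projected_density(1.0, 1965, 2018)
    print(f"Idealized growth factor 1965-2018: {growth:,.0f}x")
```

Under these assumptions the factor from 1965 to 2018 comes out at roughly 95 million, which is why even a modest slowdown in the doubling period changes the long-run picture so dramatically.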

The innovative leap digital technology has made since the 1970s is immense; however, today we’d require 75 times more researchers to maintain that pace.

Today we have multiple hardware manufacturers constantly pushing the frontier in a cohesive way. Yet not a lot of corporate giants are willing to invest in breakthrough technologies and, basically, bootstrap the entire industry. Instead, they invest in consumer electronics and hypothetical AI abilities. This gives us reason to believe Singularity will not happen in the foreseeable future. The design work necessary to maintain hardware progress is something we are not really looking to revolutionize.

Put another way, we are pursuing digital while remaining analog AF.

Diggers work at the Societe Miniere de Bisunzu (SMB) coltan mine, in Rubaya, Democratic Republic of Congo. Image credit: Bloomberg

In the current state of the digital industry, nothing will “wake up” and start methodically improving itself. But this doesn’t mean it is impossible.

Over the last couple of years, the rise of blockchain technologies has reshaped economic reality on a planetary scale. No supercomputer was built specifically to make the Bitcoin runaway possible. What made it possible was the new angle from which we saw the abundance of computational resources at hand. I venture to suggest that a hive of less smart, less hardware-dependent AIs with the machine learning capabilities available today could seriously shake us up. Mainly because there is no precedent for what happens, and no instructions to follow, once pulling the plug is no longer enough to shut an AI down.

Reality check

When we think of advanced AI, we either flip out over how creepy it is or see how we could benefit from it. We never stay unaffected.

Truth is, we change with that technology and every time we accomplish a task using it or pass the information we gained through it, we beat our yesterday’s selves.

Vernor Vinge prophetically called this phenomenon Intelligence Amplification (IA). IA is an easier and more familiar road to take. Instead of building up AI, we could focus more on how technology can help us as a secondary means, the first being our own abilities, knowledge, and experience.

IA could possibly help us with:

- Architecture. The power of AI calculations can provide safety and, mixed with human intuition, can create a valuable and actionable body of knowledge for construction.

- Art. With the amount of analyzable data on our aesthetic comprehension, artists can use AI to save time for what matters – creation – while leaving the legwork to the machines.

- Datsusara. In Japanese, this means “escaping wage slavery”. You can still provide valuable work without being tied to a desk.

- Prosthetics. Artificial limbs that can work with human neural networks with no lag or loss in performance are yet to be developed. If machine learning is capable of eradicating all living things, can it handle a couple thousand daily motions of a human limb?

Image credit: Aleksandar Savic

We all want to be a better version of ourselves. We want to be efficient and save more time for cool stuff. Our urgency to do more faster is rooted in the impermanence of life itself. All we know is operating in our dimensions. Thus, everything we’ve ever created is dictated by our own vision of life.

The philosophical issues eternal life would raise can’t even be grasped from today’s perspective. In order to avoid a loop, our immortal minds would have to keep improving, and this would lead to versioning. How would you look at your own older version? Will it ultimately lead to perfection and kill diversity?

Eagerness is in our nature; luckily, we are limited by our environment and brain capacity. We are also our own watchdogs. We doubt everything we create and still go to battle for whatever we believe in. We know how to use technology, but in our sensitivity, we can be used by it. We believe in the good and call it God, and more often than not, the Universe is good to us too. We put our lives into honest attempts to make a better world and don’t look back. We make a better world. All we can hope is that ethics becomes an attribute of a better world before Technological Singularity does.